¶ Using AVR with n8n (AI Workflow Integration)

Integrating AVR with n8n lets you build AI-powered voicebots using n8n's visual workflows.

This integration is powered by the avr-llm-n8n connector.

¶ Environment Variables

| Variable | Description | Example Value |
|---|---|---|
| PUBLIC_CHAT_URL | Your n8n public chat workflow endpoint | https://your-n8n-instance.com/webhook/chat |
| PORT | Port where the AVR n8n connector will listen | 6016 |

Replace the example PUBLIC_CHAT_URL value with your actual n8n public chat workflow URL.
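Before starting the connector, it can help to check that both variables are well formed. The following is a minimal sketch, not part of avr-llm-n8n: the function name `load_connector_config` is hypothetical, and falling back to 6016 when PORT is unset is an assumption made here for illustration (6016 is only the example value from the table).

```python
from urllib.parse import urlparse


def load_connector_config(env):
    """Read and validate PORT and PUBLIC_CHAT_URL from a mapping such as os.environ.

    Hypothetical helper for illustration; the connector itself may validate
    its settings differently.
    """
    chat_url = env.get("PUBLIC_CHAT_URL", "").strip()
    parsed = urlparse(chat_url)
    # A leftover placeholder or a bare hostname has no http(s) scheme.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("PUBLIC_CHAT_URL must be an absolute http(s) URL")

    # Assumption: fall back to the example port when PORT is not set.
    port_text = env.get("PORT", "6016")
    try:
        port = int(port_text)
    except ValueError:
        raise ValueError("PORT must be an integer") from None
    if not 1 <= port <= 65535:
        raise ValueError("PORT must be between 1 and 65535")

    return {"PUBLIC_CHAT_URL": chat_url, "PORT": port}
```

Calling `load_connector_config(os.environ)` (after `import os`) raises a `ValueError` if PUBLIC_CHAT_URL is missing or is not an absolute URL, which catches a forgotten placeholder before the container starts.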

¶ Example docker-compose (AVR n8n connector)

    avr-llm-n8n:
      image: agentvoiceresponse/avr-llm-n8n
      platform: linux/x86_64
      container_name: avr-llm-n8n
      restart: always
      environment:
        - PORT=6016
        - PUBLIC_CHAT_URL=$PUBLIC_CHAT_URL
      networks:
        - avr

¶ Example with local n8n

In the avr-infra project, you can find a complete example of how to integrate n8n with AVR.

The compose file includes both the AVR n8n connector and a local instance of n8n:

    avr-n8n:
      image: n8nio/n8n:latest
      container_name: avr-n8n
      environment:
        - GENERIC_TIMEZONE=Europe/Amsterdam
        - NODE_ENV=production
        - N8N_SECURE_COOKIE=false
      ports:
        - 5678:5678
      volumes:
        - ./n8n:/home/node/.n8n
      networks:
        - avr

If you already have an n8n installation (either in the cloud or on another server), you can comment out the avr-n8n section.

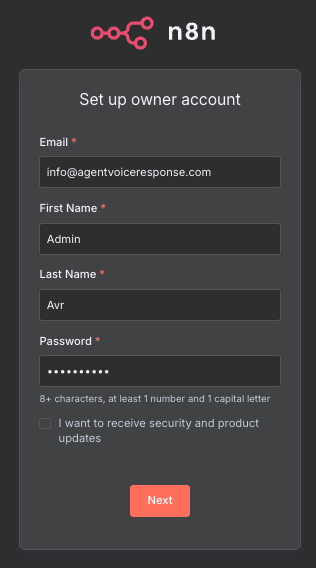

If you use the local installation, first create an account by providing email, first name, last name, and password, then continue with the setup below.

¶ Step-by-step AI Voicebot Setup

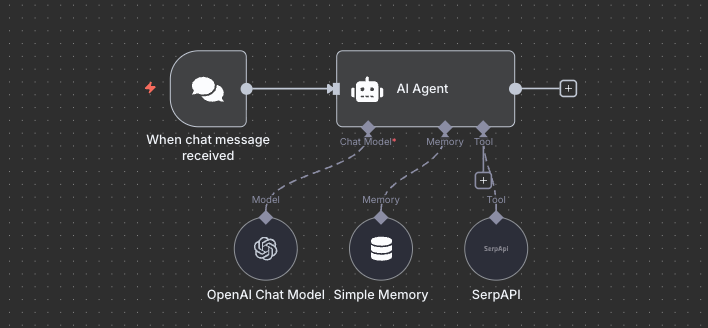

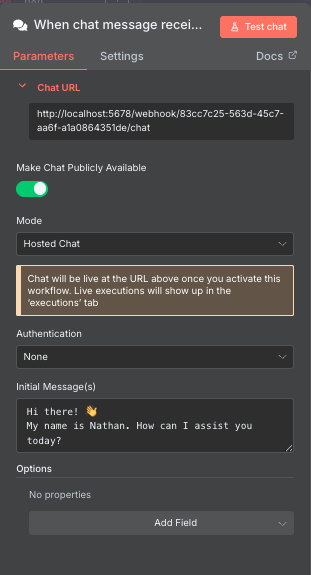

¶ 1. Start with a Chat Trigger

In n8n, create a new workflow with a Chat Trigger node.

Enable “Make Chat Publicly Available” and copy the Chat URL to use in PUBLIC_CHAT_URL.

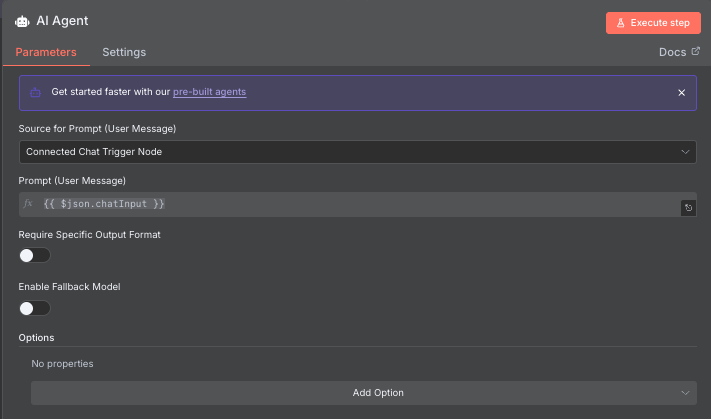
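Once the chat is public, you may want to confirm that the copied URL answers before pointing AVR at it. The sketch below sends one message to the endpoint using only the Python standard library. The payload keys (`action`, `sessionId`, `chatInput`) are an assumption based on the format commonly used by n8n's hosted chat; check them against your n8n version. The function names are hypothetical and not part of the connector.

```python
import json
import urllib.request


def build_chat_request(chat_url, text, session_id):
    """Build a POST request for an n8n public chat endpoint.

    Assumption: the Chat Trigger accepts a JSON body with the keys
    action, sessionId and chatInput. Verify this for your n8n version.
    """
    payload = {
        "action": "sendMessage",
        "sessionId": session_id,
        "chatInput": text,
    }
    return urllib.request.Request(
        chat_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_message(chat_url, text, session_id, timeout=30.0):
    """Send one message and return the decoded JSON reply from the workflow."""
    request = build_chat_request(chat_url, text, session_id)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `send_chat_message(os.environ["PUBLIC_CHAT_URL"], "Hello", "test-session-1")` (after `import os`) should return the workflow's JSON reply if the workflow is active and the Chat Trigger is public. The shape of that reply depends on how the workflow is built.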

¶ 2. Connect the Chat Trigger to an AI Agent node

Choose whether to use a Conversational Agent or a Tools Agent depending on your workflow needs.

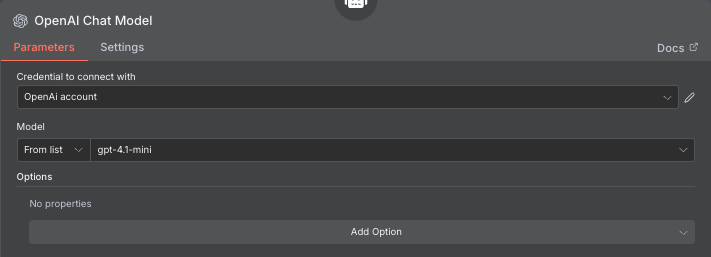

¶ 3. Add your Chat Model

Insert an AI Chat Model node (e.g., OpenAI or Anthropic).

Configure temperature, max tokens, and model according to your application.

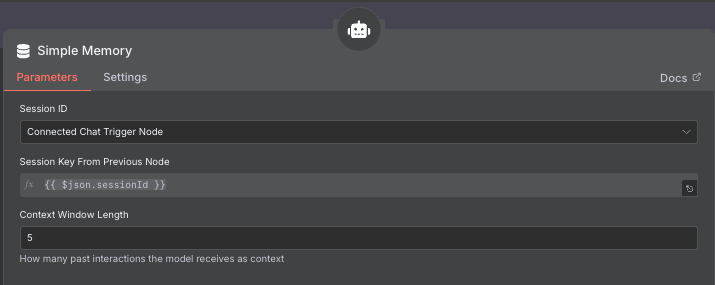

¶ 4. Enable Memory

Add a memory node (e.g., buffer memory) for context-aware conversations.

Use the chat session ID from the Chat Trigger as the session key.

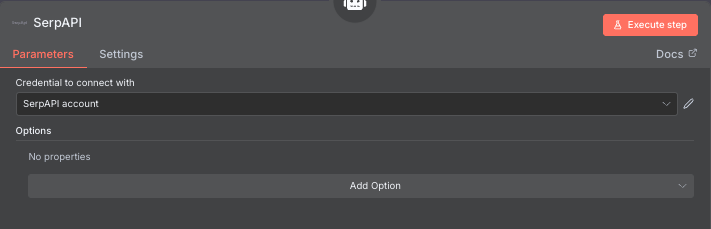
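To see why the session ID matters as the memory key, here is a conceptual sketch of buffer memory keyed by session. It is not how n8n implements its memory node; the class name `SessionBufferMemory` and the `window` parameter are hypothetical. It shows that each session (for example, each call) keeps its own recent history, and that older messages drop out once the window is full.

```python
from collections import defaultdict, deque


class SessionBufferMemory:
    """Keep the last `window` messages per session ID (illustrative only)."""

    def __init__(self, window=10):
        # One bounded deque per session; old entries are discarded automatically.
        self._history = defaultdict(lambda: deque(maxlen=window))

    def append(self, session_id, role, text):
        """Record one message under the given session key."""
        self._history[session_id].append((role, text))

    def context(self, session_id):
        """Return the stored messages for a session, oldest first."""
        return list(self._history.get(session_id, ()))


memory = SessionBufferMemory(window=2)
memory.append("call-1", "user", "What are your opening hours?")
memory.append("call-1", "assistant", "We are open 9 to 5.")
memory.append("call-1", "user", "And on Saturday?")
memory.append("call-2", "user", "Hi")
```

After these calls, `memory.context("call-1")` holds only the two most recent messages of that session, and `memory.context("call-2")` is unaffected by the first caller. Using a different key than the session ID would mix or lose context across conversations.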

¶ 5. Add Tools like SerpAPI

Extend your workflow with additional nodes like SerpAPI, databases, or custom APIs.